Preventing Autoscaler Flapping: Kubernetes HPA Tolerance in Depth

- Aug 26, 2025

- 3 min read

Background: Why Tolerance Matters in HPA

The Horizontal Pod Autoscaler (HPA) dynamically adjusts the number of pod replicas based on observed metrics such as CPU or memory utilization. To avoid erratic scaling caused by minor metric fluctuations (known as “flapping”), Kubernetes applies a tolerance threshold: if the ratio of the current metric value to the target stays within ±10%, no scaling action is taken.

This built-in tolerance improves stability, but before v1.33 it is a single cluster-wide setting that cannot be customized per workload.

However, a fixed 10% tolerance can be problematic:

In large deployments, a 10% swing may represent tens or hundreds of pods.

Latency-sensitive workloads may need much more responsive scaling.

Other workloads may need more conservative scaling to avoid resource churn.
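As a rough illustration of the first point, the sketch below estimates how many replicas the proportional scaling rule would add at the edge of a ±10% band. The HPA skips that adjustment because the deviation counts as within tolerance. It uses integer percentages to avoid floating-point rounding, and the helper `podsInsideBand` is hypothetical: it ignores min/max replica bounds, stabilization, and scaling policies.

```go
package main

import "fmt"

// podsInsideBand (hypothetical helper) estimates how many extra replicas the
// proportional rule would request when the usage ratio sits right at the edge
// of a ±tolerancePercent band, i.e. the adjustment the HPA skips because the
// deviation is treated as "within tolerance".
// Integer arithmetic (percent, rounded down) avoids floating-point surprises.
func podsInsideBand(replicas, tolerancePercent int) int {
	return replicas * tolerancePercent / 100
}

func main() {
	for _, replicas := range []int{10, 200, 1000} {
		fmt.Printf("%d replicas: up to %d pods of adjustment skipped at 10%% tolerance\n",
			replicas, podsInsideBand(replicas, 10))
	}
}
```

With 1,000 replicas, a deviation just inside the band corresponds to roughly 100 pods that are neither added nor removed.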

The Alpha Feature: Configurable Tolerance in Kubernetes v1.33

Kubernetes v1.33 introduced a new alpha feature, HPAConfigurableTolerance, which allows tolerance to be configured per HPA, separately for scale-up and scale-down.

Feature Highlights:

Alpha status in v1.33: disabled by default and must be enabled via a feature gate.

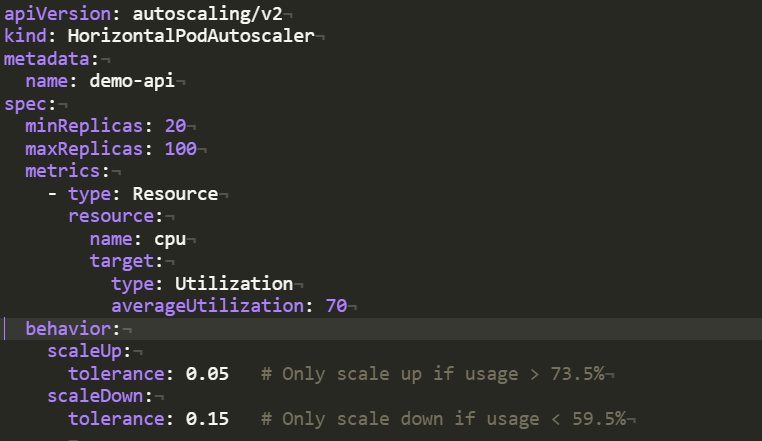

Configured through the autoscaling/v2 API, under the spec.behavior section:

spec.behavior.scaleUp.tolerance

spec.behavior.scaleDown.tolerance

Tolerance is a fractional value between 0.0 and 1.0 (for example, 0.05 means 5%) that represents the allowed relative deviation from the target.

For example, combining a small scaleUp tolerance with a larger scaleDown tolerance makes scaling responsive to traffic spikes but conservative during drops.
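The per-direction semantics can be pictured as an asymmetric "no-scale" band around a usage ratio of 1.0: below 1 − scaleDown tolerance the HPA may scale down, above 1 + scaleUp tolerance it may scale up. The sketch below models that band. The `Tolerances` type and `isWithin` method are hypothetical stand-ins for the two spec.behavior fields, not the controller's actual code.

```go
package main

import "fmt"

// Tolerances is a hypothetical stand-in for the
// spec.behavior.scaleUp.tolerance and spec.behavior.scaleDown.tolerance fields.
type Tolerances struct {
	ScaleUp   float64
	ScaleDown float64
}

// isWithin reports whether a usage ratio (current / target) lies inside the
// asymmetric band [1 - ScaleDown, 1 + ScaleUp], where no scaling happens.
func (t Tolerances) isWithin(ratio float64) bool {
	return ratio >= 1.0-t.ScaleDown && ratio <= 1.0+t.ScaleUp
}

func main() {
	// Responsive on spikes (1% scale-up tolerance),
	// conservative on drops (15% scale-down tolerance).
	tol := Tolerances{ScaleUp: 0.01, ScaleDown: 0.15}
	for _, ratio := range []float64{1.05, 0.90, 0.80} {
		fmt.Printf("ratio %.2f within tolerance: %v\n", ratio, tol.isWithin(ratio))
	}
}
```

Here a 5% rise already triggers a scale-up, while a 10% dip is ignored and only a 20% dip leads to a scale-down.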

Enabling the Feature

To use configurable tolerances in v1.33:

Enable the feature gate on both kube-apiserver and kube-controller-manager:

--feature-gates=HPAConfigurableTolerance=true

Use the autoscaling/v2 API and include the tolerance fields under behavior.scaleUp and/or behavior.scaleDown.

If the tolerance fields are omitted, the HPA falls back to the cluster-wide tolerance (10% by default), which can only be changed globally through the --horizontal-pod-autoscaler-tolerance flag on kube-controller-manager, not through the API.
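The fallback rule can be sketched as a simple resolution step. This is an illustrative model, not controller code: `effectiveTolerance` is a hypothetical helper, `defaultTolerance` stands in for the value of --horizontal-pod-autoscaler-tolerance, and a nil pointer models an omitted tolerance field.

```go
package main

import "fmt"

// defaultTolerance stands in for the controller-wide value configured with
// --horizontal-pod-autoscaler-tolerance (0.1 unless overridden).
const defaultTolerance = 0.1

// effectiveTolerance (hypothetical helper) returns the per-HPA tolerance when
// it is set and otherwise falls back to the cluster-wide default.
// A nil pointer models an omitted spec.behavior.<direction>.tolerance field.
func effectiveTolerance(perHPA *float64, clusterDefault float64) float64 {
	if perHPA != nil {
		return *perHPA
	}
	return clusterDefault
}

func main() {
	up := 0.02
	fmt.Println("scaleUp:", effectiveTolerance(&up, defaultTolerance))    // per-HPA value is used
	fmt.Println("scaleDown:", effectiveTolerance(nil, defaultTolerance)) // falls back to the default
}
```

Using a pointer rather than a plain float distinguishes "not set" from an explicit 0, which would mean reacting to any deviation at all.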

Under the Hood: How Tolerance Affects Scaling Logic

The HPA controller compares the current metric with the target as a ratio and applies a tolerance check before changing the replica count. In simplified form, with a single symmetric tolerance:

```go
// Simplified tolerance check (single symmetric tolerance).
if math.Abs(1.0-currentUtilization/targetUtilization) <= tolerance {
	// Within tolerance: skip scaling.
	return currentReplicas
}
```

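When the deviation exceeds the tolerance, the scaling decision proceeds and the controller computes desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue).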
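Putting the tolerance check and the proportional rule together gives a compact model of a single scaling decision. This is a simplified sketch, assuming per-direction tolerances as described above; `desiredReplicas` is a hypothetical function that ignores min/max replica bounds, unready pods, stabilization windows, and scaling policies.

```go
package main

import (
	"fmt"
	"math"
)

// desiredReplicas sketches one HPA decision:
//   - if the usage ratio lies within [1 - downTol, 1 + upTol], keep the current count;
//   - otherwise apply desired = ceil(current * currentMetric / targetMetric).
// Min/max bounds, stabilization windows, and policies are deliberately ignored.
func desiredReplicas(current int, currentMetric, targetMetric, upTol, downTol float64) int {
	ratio := currentMetric / targetMetric
	if ratio >= 1.0-downTol && ratio <= 1.0+upTol {
		return current // within tolerance: no scaling
	}
	return int(math.Ceil(float64(current) * ratio))
}

func main() {
	// 10 replicas with a 50% CPU utilization target.
	fmt.Println(desiredReplicas(10, 54, 50, 0.1, 0.1)) // ratio 1.08 is within 10%: 10
	fmt.Println(desiredReplicas(10, 54, 50, 0.0, 0.1)) // scaleUp tolerance 0: 11
	fmt.Println(desiredReplicas(10, 25, 50, 0.1, 0.1)) // ratio 0.5: 5
}
```

The first two calls differ only in the scale-up tolerance, which is exactly the knob this feature exposes per HPA.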

Implications of tuning tolerance:

Lower scaleUp tolerance → more responsive scaling at the cost of potential overshoot.

Higher scaleDown tolerance → reduces unnecessary scale-down events during temporary dips.
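To see the second effect on a noisy signal, the sketch below counts how many samples in a short, made-up series of usage ratios would cross the scale-down threshold under two different scaleDown tolerances. The data and the `countScaleDowns` helper are hypothetical, and the model is deliberately crude: each sample is judged on its own, with no stabilization window, no policies, and no change in replica count between samples.

```go
package main

import "fmt"

// countScaleDowns (hypothetical helper) counts the samples whose usage ratio
// (current / target) falls below 1 - downTol, i.e. would trigger a scale-down.
// Each sample is judged independently; stabilization and policies are ignored.
func countScaleDowns(ratios []float64, downTol float64) int {
	n := 0
	for _, r := range ratios {
		if r < 1.0-downTol {
			n++
		}
	}
	return n
}

func main() {
	// Hypothetical samples: steady load with a few brief dips.
	ratios := []float64{1.00, 0.88, 0.97, 0.86, 1.02, 0.83, 0.99}
	fmt.Println("downTol 0.10:", countScaleDowns(ratios, 0.10)) // 3
	fmt.Println("downTol 0.20:", countScaleDowns(ratios, 0.20)) // 0
}
```

With the default 10% band, each of the three brief dips is a scale-down candidate; widening the band to 20% absorbs all of them.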

Best Practices & Real‑World Tips

| Scenario | Recommended tolerance settings |
| --- | --- |
| Latency-sensitive APIs | scaleUp.tolerance: 0 – 0.01 (respond fast) |
| Cost-sensitive batch jobs | scaleDown.tolerance: 0.1 – 0.2 (avoid flapping) |
| Balanced workloads | Fine-tune both for steady scaling behavior |

Combine with:

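stabilizationWindowSeconds, which makes the controller take recent recommendations into account (for scale-down, the highest one within the window) so that replica counts do not bounce back and forth.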

policies under behavior to control scaling rate (e.g., Pods or Percent policies).

Caution: alpha features are disabled by default and may change between releases, so use this one carefully in production; test thoroughly and monitor closely.

Roadmap: What’s Next?

According to the KEP-4951 tracking issue on GitHub:

Alpha in v1.33, with graduation to beta targeted for v1.34 and further progress in later releases.

Future improvements may include:

Per-metric tolerance (e.g., CPU vs. memory)

Dynamic or automated tolerance adjustments based on historical patterns

Deeper integration with vertical autoscaling (VPA), predictive scaling, and similar mechanisms

Summary

In Kubernetes v1.33 (alpha), the HPAConfigurableTolerance feature enables per-HPA, per-direction tolerance tuning, letting you tailor autoscaling sensitivity to each workload. It closes a long-standing gap by replacing the one-size-fits-all 10% tolerance with fine-grained control.

By enabling this feature and tuning tolerance values alongside stabilization windows and scaling policies, you can achieve more responsive, stable, and cost-efficient autoscaling. Keep an eye on its progress toward beta in v1.34 and general availability in a later release.


Ready to take your Kubernetes autoscaling to the next level?

At Ananta Cloud, we help platform teams simplify and optimize infrastructure operations—so you can scale intelligently, reduce costs, and keep your applications performing at their best.

🔹 Need help implementing advanced HPA strategies like configurable tolerances?

🔹 Looking to fine-tune autoscaling across environments with real-time observability and control?

Let’s chat: book a free consultation.

Smarter scaling starts with smarter tooling. Get in touch with Ananta Cloud today.
